Sometimes Google Search Console reports that Googlebot can no longer access the CSS and JS files on your site. Googlebot needs to fetch your JavaScript and CSS files to understand a page the way a visitor sees it. If it cannot fetch them, Search Console shows an error. Ranking well in Google starts with letting Google fetch and crawl your pages, and it helps to have a user-friendly website. WordPress, one of the best content management platforms, normally lets Google fetch a site without blocking anything.

However, security plugins or other security measures added to WordPress may block JS and CSS files automatically. What causes the block? In most cases it is a rule in the robots.txt or .htaccess file that hides CSS or JS files from Google. The result can be poor SEO performance.

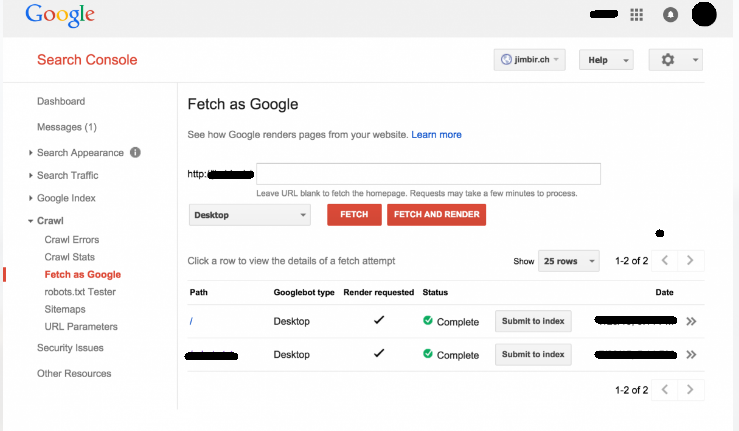

Step 1: Make sure the website’s static resources are not blocked. In Google Search Console, open the “Crawl” menu and select “Fetch as Google”.

Step 2: Don’t forget to click the “Fetch and Render” button! When the result appears, click its row to compare how Googlebot sees the website with how a visitor sees it.

Step 3: If the two views differ, Googlebot cannot fetch some of your CSS or JS files.

To find the blocked resources, go to “Google Index” and select “Blocked Resources”. Each resource that Googlebot cannot access is listed there. Most often these are files loaded by the theme or plugins you use in WordPress.
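As a complement to the Blocked Resources report, you can list the CSS and JS files a page references and compare them with your robots.txt rules. The following is a minimal Python sketch that uses only the standard library. The sample markup, the theme name `example-theme`, and the function name `list_static_resources` are made up for illustration.

```python
from html.parser import HTMLParser


class StaticResourceParser(HTMLParser):
    """Collects the URLs of stylesheets and scripts referenced by a page."""

    def __init__(self):
        super().__init__()
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "script" and attributes.get("src"):
            # External JavaScript file
            self.resources.append(attributes["src"])
        elif tag == "link" and attributes.get("href"):
            # Only keep <link> tags that load a stylesheet
            rel = (attributes.get("rel") or "").lower().split()
            if "stylesheet" in rel:
                self.resources.append(attributes["href"])


def list_static_resources(html):
    """Return CSS and JS URLs in the order they appear in the markup."""
    parser = StaticResourceParser()
    parser.feed(html)
    return parser.resources


# Hypothetical page markup, for illustration only.
SAMPLE_HTML = """
<html><head>
<link rel="stylesheet" href="/wp-content/themes/example-theme/style.css">
<script src="/wp-includes/js/jquery/jquery.js"></script>
</head><body><p>Hello</p></body></html>
"""

if __name__ == "__main__":
    for url in list_static_resources(SAMPLE_HTML):
        print(url)
```

In practice you would feed the function the HTML of your own page. Any path it returns that falls under a disallowed directory is a likely candidate for the error.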

So, correct the robots.txt file right away so that it no longer hides these files from Googlebot.

Step 1: To edit the file, connect to your website with an FTP client. The robots.txt file is in the root directory of the website.

Step 2: If you use the Yoast SEO plugin, you can instead go to “SEO”, open the “Tools” page, and click “File Editor”.

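You will likely find that robots.txt disallows access to WordPress directories such as /wp-admin/, /wp-includes/, /wp-content/plugins/, and /wp-content/themes/. Check which CSS and JS files the front end of your site uses and remove the rules that block them. Go through the theme and plugin folders fully, and remove the rule for wp-includes as well.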

A robots.txt with these rules looks like this:

```
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /wp-content/plugins/
Disallow: /wp-content/themes/
```

Also check whether the robots.txt file contains any rules at all or is empty. If Googlebot does not find a robots.txt file, it crawls and indexes all files automatically.
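To see what Disallow rules like these mean for static files, you can test a few URLs locally. This is a rough Python sketch using the standard library's `urllib.robotparser`. The example.com URLs and the `blocked_resources` helper are hypothetical, and Python's parser only approximates how Googlebot interprets robots.txt, so treat the result as a hint rather than a verdict.

```python
from urllib.robotparser import RobotFileParser

# The rules from the article, as they might appear in robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /wp-content/plugins/
Disallow: /wp-content/themes/
"""

# Hypothetical URLs, for illustration only.
URLS = [
    "https://example.com/wp-content/themes/example-theme/style.css",
    "https://example.com/wp-includes/js/jquery/jquery.js",
    "https://example.com/wp-content/uploads/logo.png",
]


def blocked_resources(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text blocks for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_resources(ROBOTS_TXT, URLS):
        print("Blocked:", url)
```

With the rules above, the theme stylesheet and the script under wp-includes are reported as blocked, while the file under wp-content/uploads is not, because no rule covers that folder.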

By default, some hosting providers apply security measures that block bots from accessing WordPress folders.

You can override this by explicitly allowing access in robots.txt:

```
User-agent: *
Allow: /wp-includes/js/
```

Save all the changes! That completes the fix: Googlebot can fetch your CSS and JS files again, which gives your website a better chance of ranking well in Google.
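The cleanup described above, removing the Disallow lines that hide CSS and JS folders, can also be sketched in Python. This is only an illustration under simple assumptions: the helper name `remove_disallow_rules` is hypothetical, rules are matched by exact path, and comments or unusual spacing inside robots.txt are not handled.

```python
# The blocking rules from the article, as they might appear in robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /wp-content/plugins/
Disallow: /wp-content/themes/
"""

# Folders whose Disallow rules should be dropped so CSS and JS become reachable.
PATHS_TO_UNBLOCK = {
    "/wp-includes/",
    "/wp-content/plugins/",
    "/wp-content/themes/",
}


def remove_disallow_rules(robots_txt, paths_to_unblock):
    """Return robots.txt text without Disallow lines for the given paths."""
    kept = []
    for line in robots_txt.splitlines():
        field, _, value = line.partition(":")
        is_disallow = field.strip().lower() == "disallow"
        if is_disallow and value.strip() in paths_to_unblock:
            continue  # drop the rule that blocks a static-resource folder
        kept.append(line)
    return "\n".join(kept) + "\n"


if __name__ == "__main__":
    print(remove_disallow_rules(ROBOTS_TXT, PATHS_TO_UNBLOCK), end="")
```

The rule for /wp-admin/ is left in place here because it is not in the set of paths to unblock. Review the result before uploading it, since your own robots.txt may contain other rules.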